Screen Readers vs Voice Control: How Users Navigate the Web

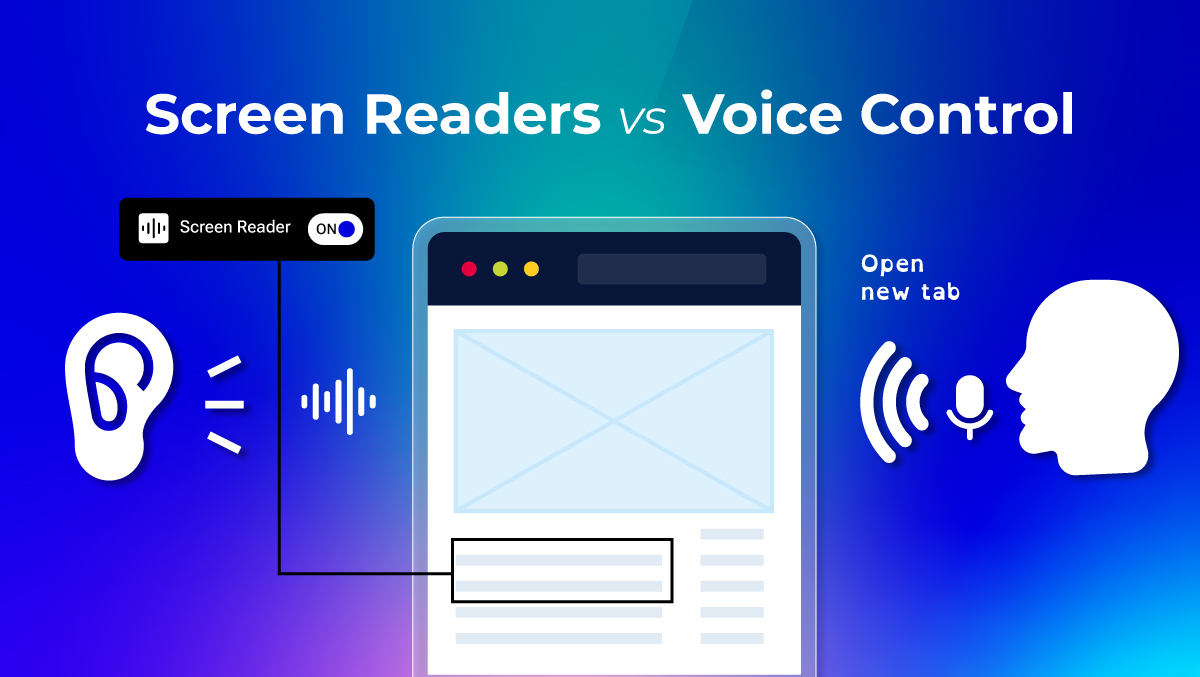

For many people with disabilities, access to the Internet is dependent on assistive technology. Screen readers and voice control are two of the most common forms.

While these two types of assistive technology serve entirely different purposes, both are essential. At the same time, the growing popularity of voice assistants such as Siri, Alexa, and Google Assistant indicates that demand for hands-free access to the digital world is increasing.

This guide will explain how each technology works, its strengths and weaknesses, and what developers must consider when creating digital spaces that include everyone.

What are Screen Readers and How Do They Work?

Screen readers are software that transforms digital content into speech or Braille, helping individuals who are blind or have low vision access websites and applications.

Instead of using a mouse, users navigate with keyboard shortcuts, jumping between headings, links, and landmarks.

How do Screen Readers Work?

Screen readers rely on the web page's HTML structure, which the browser exposes to assistive technology through its accessibility tree. Important information for screen reader software includes alt text, form labels, and ARIA roles.

These elements help the software understand how to describe parts of the document, like images or input fields. Without them, users would face severe barriers.
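As a rough illustration of what "missing" information looks like in markup, the sketch below scans an HTML string for images without an `alt` attribute and form fields without an associated label. It uses only Python's standard `html.parser` module. The class and function names (`AccessibilityHintChecker`, `check_markup`) are made up for this example, and the check is deliberately simplified: it ignores labels that wrap their field and is no substitute for a real accessibility audit.

```python
from html.parser import HTMLParser

# Input types that do not need a separate text label (simplifying assumption).
SKIPPED_INPUT_TYPES = {"hidden", "submit", "button", "reset"}


class AccessibilityHintChecker(HTMLParser):
    """Collects images without alt text and form fields without a label."""

    def __init__(self):
        super().__init__()
        self.images_missing_alt = []  # src values of <img> tags with no alt attribute
        self.labelled_ids = set()     # ids referenced by <label for="...">
        self.fields = []              # (id, has_aria_label) for each form field

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img" and "alt" not in attributes:
            # An empty alt="" is allowed (decorative image); a missing alt is not.
            self.images_missing_alt.append(attributes.get("src", "(no src)"))
        elif tag == "label" and attributes.get("for"):
            self.labelled_ids.add(attributes["for"])
        elif tag in ("input", "select", "textarea"):
            if attributes.get("type") in SKIPPED_INPUT_TYPES:
                return
            has_aria = bool(
                attributes.get("aria-label") or attributes.get("aria-labelledby")
            )
            self.fields.append((attributes.get("id"), has_aria))

    def unlabelled_fields(self):
        """Fields with neither a matching <label for> nor an ARIA label."""
        return [
            field_id or "(no id)"
            for field_id, has_aria in self.fields
            if not has_aria and field_id not in self.labelled_ids
        ]


def check_markup(html):
    """Return (images missing alt, unlabelled form fields) for an HTML string."""
    checker = AccessibilityHintChecker()
    checker.feed(html)
    checker.close()
    return checker.images_missing_alt, checker.unlabelled_fields()


sample = """
<img src="logo.png" alt="Company logo">
<img src="chart.png">
<label for="email">Email</label>
<input id="email" type="email">
<input id="phone" type="tel">
<input type="search" aria-label="Search the site">
"""

missing_alt, unlabelled = check_markup(sample)
print(missing_alt)  # ['chart.png']
print(unlabelled)   # ['phone']
```

In this sample, a screen reader would have nothing meaningful to announce for `chart.png`, and the `phone` field would be read without a name.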

The WebAIM Screen Reader User Survey highlighted that 76% of screen reader users "always or often" navigate by headings. This shows how much a clear heading structure matters for accessible navigation.
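To make the idea of heading navigation concrete, here is a minimal sketch that extracts a page's heading outline, roughly the list a screen reader user jumps through, and flags headings that skip a level. It assumes plain `h1` to `h6` elements and uses Python's standard `html.parser`; the helper names (`heading_outline`, `skipped_levels`) are hypothetical and chosen for this example only.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingOutline(HTMLParser):
    """Records every heading as a (level, text) pair in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None  # level of the heading currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._level is not None:
            self.headings.append((self._level, "".join(self._text).strip()))
            self._level = None


def heading_outline(html):
    """Return the list of (level, text) headings found in an HTML string."""
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    return parser.headings


def skipped_levels(headings):
    """Return headings that sit more than one level below the previous heading.

    The first heading is compared against level 0, so a page that does not
    start with an h1 is flagged as well.
    """
    skipped = []
    previous = 0
    for level, text in headings:
        if level > previous + 1:
            skipped.append((level, text))
        previous = level
    return skipped


page = (
    "<h1>Guide</h1><p>Intro</p>"
    "<h2>Screen readers</h2><h4>Shortcuts</h4>"
    "<h2>Voice control</h2>"
)

outline = heading_outline(page)
print(outline)
# [(1, 'Guide'), (2, 'Screen readers'), (4, 'Shortcuts'), (2, 'Voice control')]
print(skipped_levels(outline))
# [(4, 'Shortcuts')]
```

A jump from `h2` to `h4`, as in the sample, does not break a screen reader, but it makes the outline harder to follow for someone navigating by heading level.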

JAWS, NVDA, VoiceOver, and TalkBack are among the best-known screen readers supporting non-visual web access.

What is Voice Control and How Does It Work?

Voice control allows users to navigate and interact with their device using voice commands instead of a keyboard or mouse. It makes devices accessible to people with motor disabilities, and people without impairments may use it for convenience or hands-free access.

How does Voice Control work?

Voice control applications typically rely on speech recognition to process commands, such as "scroll down", "click link", or "open settings". The speech recognition engine identifies the spoken command and maps it to a device or website function.
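The mapping step can be sketched in a few lines. The example below assumes speech recognition has already produced a text transcript and shows only how that text might be matched to an action. The `state` dictionary, the handler functions, and the `COMMANDS` table are all invented for illustration and do not reflect how any particular voice control product is implemented.

```python
import string


def normalize(transcript):
    """Lowercase the recognized text and drop punctuation and extra spaces."""
    cleaned = transcript.lower().translate(
        str.maketrans("", "", string.punctuation)
    )
    return " ".join(cleaned.split())


# Hypothetical handlers: each one updates a simple state dict standing in
# for a real page or device, and returns a short description of what it did.
def scroll_down(state):
    state["scroll_y"] += 500
    return "scrolled to " + str(state["scroll_y"])


def click_link(state):
    state["clicks"] += 1
    return "activated the focused link"


def open_settings(state):
    state["view"] = "settings"
    return "opened settings"


# Table that maps a normalized spoken phrase to the function it triggers.
COMMANDS = {
    "scroll down": scroll_down,
    "click link": click_link,
    "open settings": open_settings,
}


def dispatch(transcript, state):
    """Run the handler for a recognized phrase, or return None if unknown."""
    handler = COMMANDS.get(normalize(transcript))
    if handler is None:
        return None
    return handler(state)


state = {"scroll_y": 0, "clicks": 0, "view": "home"}
print(dispatch("Scroll down.", state))   # scrolled to 500
print(dispatch("Open Settings", state))  # opened settings
print(dispatch("make coffee", state))    # None
```

The `None` result for an unknown phrase mirrors a common failure mode of voice control: if the spoken words do not match a command the system knows, nothing happens.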

Newer voice control tools have built-in support for natural language, so a user can say, "Search for hotels near me" instead of issuing a rigid, predefined command.

Some standard tools include Dragon NaturallySpeaking, Google Assistant, Siri, and Alexa. Voice control functions are widely available on desktop or laptop computers, mobile devices, and smart devices.

Screen Readers vs. Voice Control: Direct Comparison

Screen readers and voice control are both effective access tools, but they address different accessibility needs. Screen readers give non-visual users access to digital information, while voice control allows interaction without hands.

The table below shows the differences side by side:

| Aspect | Screen Readers | Voice Control |
| --- | --- | --- |
| Primary Purpose | Converts on-screen text/UI into speech or Braille for blind or low-vision users | Lets users control devices and navigate the web using spoken commands |
| Main Users | People who are blind, have low vision, or have reading disabilities | People with motor impairments, multitasking users, or those preferring hands-free interaction |
| Input / Output | Keyboard input; speech or Braille output | Voice input; visual and auditory feedback |
| Navigation Style | Structured: jump by headings, links, landmarks, lists | Conversational: natural language commands (“click submit,” “scroll down”) |
| Strengths | Precise, efficient with shortcuts, reliable across platforms | Hands-free, natural interaction, useful on mobile and smart devices |
| Weaknesses | Depends on well-structured HTML; struggles with poorly coded sites | Speech recognition errors, discoverability limits, and privacy concerns |
| Popular Tools | JAWS, NVDA, VoiceOver, TalkBack | Dragon NaturallySpeaking, Google Assistant, Siri, Alexa |
| Best Use Case | Reading articles, filling forms, and structured navigation | Quick searches, mobile browsing, controlling devices hands-free |

The comparison shows that the two are not competing tools but complementary ones. Each addresses a separate accessibility problem, extending access to more user groups.

Screen Readers: Strengths & Weaknesses

Strengths:

- Screen readers offer an organized view of web content when the page is marked up correctly.

- They allow fast navigation by letting users jump between headings, links, or form fields.

- Screen readers such as JAWS, NVDA, VoiceOver, and TalkBack are well-established tools, and both their desktop and mobile versions are updated regularly.

Weaknesses:

- Screen readers depend on correctly coded HTML, descriptive alt text, and proper ARIA roles. If the HTML is poorly coded, the website's structure may be confusing or impossible to navigate.

- The learning curve is steep: there are many commands and shortcuts to learn, which is especially challenging for new users whose organizations do not provide technology training.

- On sites that are poorly coded or not designed for accessibility, browsing can be slow.

Screen readers are powerful tools for their users, but their usefulness depends on how closely website owners and developers follow accessibility standards.

Voice Control: Strengths & Weaknesses

Strengths:

- Allows hands-free access, which is essential for those with motor impairments.

- Uses natural language commands, which makes it easier for many users to get started quickly without learning key sequences.

- It works well on mobile devices and smart assistants, where a mouse and keyboard are less practical.

Weaknesses:

- Accuracy may suffer when dealing with heavy accents, ambient noise, or specialized vocabulary and jargon.

- Discoverability is limited: users may not know which commands or features are available.

- Users may have privacy concerns because many systems process speech data through cloud resources.

Voice control is dependable for quick commands, but it is less precise than a screen reader for exploring documents or complex websites.

When Each Works Best

Screen readers and voice control address different scenarios, and are not interchangeable.

- For content-heavy, structured tasks such as reading lengthy articles or filling in forms, a screen reader is more appropriate, especially on sites that use semantic HTML. Screen readers give users granular control over how they move through information.

- Voice control typically works best for short, simple commands, like searching, opening links, and scrolling through a page. It is especially useful on mobile devices or when you cannot use your hands.

They can also complement each other. For example, a user could issue a command by voice while a screen reader provides spoken feedback. Combining the two has become more feasible with advances in AI tools.

How Accesstive Supports Screen Readers and Voice Control

Accesstive is an AI-powered accessibility platform designed to improve how websites work with screen readers and voice control technologies.

- For screen reader users, Access Audit helps identify issues such as missing headings, alt text, or semantic structure that can block navigation. These findings support teams in ensuring content is properly marked up and usable with assistive technologies.

- To maintain accessibility as sites evolve, Access Monitor tracks changes over time and helps detect regressions that may affect screen reader or voice-based interaction.

- User-facing adjustments through Access Widget allow users to adapt visual and interaction settings, improving readability and usability alongside assistive tools.

- For voice control users, Access Accy supports clearer interface labeling and consistent navigation patterns, improving the reliability of commands such as “click button” or “open menu” through conversational guidance.

- When deeper validation or remediation is required, Access Services combine automated insights with expert reviews to ensure accessibility improvements are accurate, effective, and aligned with accessibility standards.
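The point about interface labeling and voice commands can be illustrated with a small, generic check. This is not Accesstive's implementation, only a sketch of the idea behind WCAG Success Criterion 2.5.3 (Label in Name): a command like "click Submit" is more likely to work when the text a user sees on a control also appears in the name exposed to assistive technology. The function names and the sample `controls` data are assumptions made for this example.

```python
import string


def _normalize(text):
    """Lowercase, strip punctuation, and collapse whitespace."""
    cleaned = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(cleaned.split())


def label_in_name(visible_label, accessible_name):
    """True if the visible label appears, as whole words, in the accessible name."""
    visible = _normalize(visible_label)
    name = _normalize(accessible_name)
    if not visible:
        return False
    # Pad with spaces so "sub" does not match inside "submit".
    return f" {visible} " in f" {name} "


def find_mismatches(controls):
    """Return the visible labels whose accessible name does not contain them."""
    return [
        control["visible"]
        for control in controls
        if not label_in_name(control["visible"], control["name"])
    ]


# Hypothetical controls: "visible" is the on-screen text, "name" is the
# accessible name (for example, from aria-label).
controls = [
    {"visible": "Submit", "name": "Submit order"},
    {"visible": "Menu", "name": "Open navigation"},
    {"visible": "Search", "name": "search"},
]

print(find_mismatches(controls))  # ['Menu']
```

Here a user who says "click Menu" may get no response, because the control's exposed name is "Open navigation" and never mentions the word they can see.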

Conclusion

Each tool has its own strengths, and both can do their work only when the websites they interact with are built with accessibility in mind.

Screen readers give blind and low-vision users comprehensive access to digital content. Meanwhile, voice control allows hands-free navigation for people with motor disabilities and for others who find spoken commands easier to use.

If you want to find out how your site performs with voice control and screen readers, you can start with a free accessibility scan on Accesstive. An accessibility scan is a quick way to uncover access barriers and a first step toward a more inclusive digital experience.

As the accessibility industry continues to evolve, voice control and screen reading are likely to become more closely integrated.

FAQs:

Can I use a screen reader and voice control together?

Yes, you can use both. Voice control lets you give commands, while the screen reader reads out what's on the screen. A little setup is needed, but they can work together smoothly.

Why do screen readers sometimes read content incorrectly?

Screen readers can get things wrong if a website isn't coded properly. They need a clear structure (like headings and roles) to read things in the right order. Without that, the content might be read out of sequence.

How can I test my website with screen readers and voice control?

Test a website without a mouse. Use a screen reader to navigate headings and links, and voice control for commands like “scroll down” or “submit form.” If basic tasks are hard, the site may have accessibility issues.

Are there privacy concerns with voice control?

Yes, there are privacy concerns. Many voice control systems send your speech to cloud servers, so your voice data may be stored or reviewed. Check privacy settings to turn off unnecessary data sharing.

Does making a website accessible affect its performance?

It can. Accessibility features like ARIA and extra markup may slightly impact load time. However, a well-optimized site balances both. Poor coding or heavy scripts usually cause more slowdown than accessibility features.